IEEE Smart Grid Interviews

Interview with Poorva Sharma and Ron Melton

Poorva Sharma is a Software Engineer with the Computational and Data Engineering group at PNNL. She specializes in developing new software tools in the fields of knowledge management, distributed systems, integration systems, and streaming middleware. Her work focuses on developing integration solutions for power grid and building energy research. She worked on multiple projects under PNNL’s Future Power Grid Initiative and developed the GridOPTICS™ Software System (GOSS). She is currently working on various projects under DOE’s Grid Modernization Lab Consortium and PNNL’s Connected Homes initiatives. She holds a B.E. in Computer Science and is currently pursuing an M.S. in Computer Science from Washington State University.

Ron Melton is the Group Leader of the Distributed Systems Group in the Electricity Infrastructure and Buildings Division, and a Senior Power Systems Researcher and Project Manager at the Pacific Northwest National Laboratory (PNNL). He is the Principal Investigator for the DOE Advanced Grid Research project for an ADMS Open Source Platform, a member of the core team for the DOE Grid Architecture project, and Administrator of the GridWise® Architecture Council. He was the Project Director of the Pacific Northwest Smart Grid Demonstration, which concluded in June 2015. He has 10 years of experience in cybersecurity for critical infrastructure systems and over 30 years of experience applying computer technology to a variety of engineering and scientific problems. Dr. Melton is a Senior Member of the Institute of Electrical and Electronics Engineers and a Senior Member of the Association for Computing Machinery. He chairs the IEEE Power and Energy Society Smart Buildings, Loads, and Customer Systems Technical Committee and the Smart Electric Power Alliance Grid Architecture Working Group. Dr. Melton received his BSEE from the University of Washington and his MS and PhD in Engineering Science from the California Institute of Technology.

In this interview, Poorva and Ron answer questions from their webinar, A Standardized API for Distribution System Control and Management, originally presented on June 25, 2020.

UtilityAPI has an OpenAPI 3.0 REST API. How does this relate to their API, and why did you not choose to use OpenAPI 3.0 for your API?

OpenAPI 3.0, or Swagger, is a tool to define, document, and interact with RESTful APIs. GridAPPS-D APIs are based on the publish-subscribe mechanism, not REST. Using publish-subscribe technology gives us more flexibility to create applications that are data and event driven. We could use OpenAPI 3.0 to document the GridAPPS-D APIs but not to implement the APIs themselves.
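To make the distinction concrete, here is a minimal sketch of the publish-subscribe pattern, assuming a toy in-memory broker. The `InMemoryBroker` class, the topic name, and the `voltage_pu` field are hypothetical and are not part of the GridAPPS-D API. The point is only that the application registers a handler once and then reacts to data as it arrives, rather than polling an endpoint as a REST client would.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Message = Dict[str, float]
Handler = Callable[[str, Message], None]


class InMemoryBroker:
    """Toy stand-in for a message broker (hypothetical, not GridAPPS-D)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler to be called for every message on a topic."""
        self._handlers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> int:
        """Deliver a message to each subscriber; return the delivery count."""
        handlers = list(self._handlers.get(topic, []))
        for handler in handlers:
            handler(topic, message)
        return len(handlers)


broker = InMemoryBroker()
alerts: List[str] = []


def voltage_monitor(topic: str, message: Message) -> None:
    """Event-driven application logic: runs only when new data is published."""
    if message["voltage_pu"] < 0.95:
        alerts.append(f"low voltage on {topic}: {message['voltage_pu']:.2f} pu")


# Hypothetical topic name, chosen for illustration only.
TOPIC = "hypothetical/simulation/output"
broker.subscribe(TOPIC, voltage_monitor)

# A publisher (for example, a simulator) pushes measurements as they occur.
broker.publish(TOPIC, {"voltage_pu": 1.01})
broker.publish(TOPIC, {"voltage_pu": 0.93})

print(alerts)
```

In a real deployment the broker would be a separate messaging service and the publisher and subscriber would be separate processes; the sketch collapses them into one process to show the control flow.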

UtilityAPI is focused on the exchange of specific utility data related to customer billing, not on system operations of the distribution network. GridAPPS-D might take advantage of UtilityAPI or similar APIs to access metering data from back-office systems.

What are the commercial ADMS vendor platforms that have reference implementations of GridAPPS-D today?

Currently, no commercial platforms have implemented the GridAPPS-D API. Multiple vendors are involved in the GridAPPS-D Industrial Advisory Board, and discussions are underway with some of them about implementing GridAPPS-D compliance in their products.

There was a lot of mention of CIM in this presentation. Can you explain a bit about "MultiSpeak" as well? What are the pros and cons of going with CIM vis-a-vis MultiSpeak?

MultiSpeak is an information-model-based standard developed by the National Rural Electric Cooperative Association. Elements of MultiSpeak are often used by co-ops and public utilities. Harmonization efforts between the CIM and MultiSpeak are underway. The CIM was created to represent network models from the outset. MultiSpeak began as a back-office integration standard and later added network model capabilities. Both now have to address representations of distributed energy resources. Our long-term objective is for the standardized GridAPPS-D API to work with either type of network model.

How do you include predictive analytics, and what type of applications are available through GridAPPS-D?

Predictive analytics would typically be an element of a GridAPPS-D application rather than a service of the platform itself. Two of the example applications have predictive analytics elements.

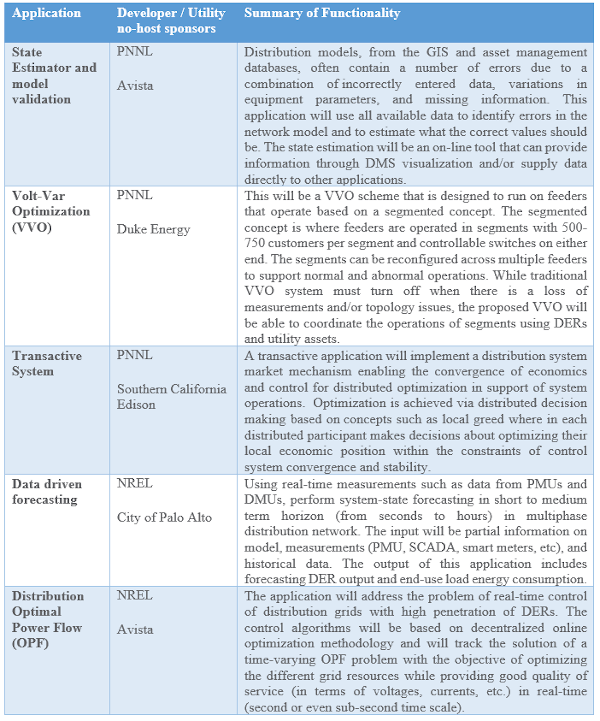

GridAPPS-D application run in their own Docker containers. This gives them control over defining their execution environment. Current integrated applications are listed in the table below.

I really have to commend your team for having Docker implementations for GridAPPS-D on GitHub. Can you produce more documentation for us to "play" with those sample applications so that enthusiasts like me can "play around"?

GridAPPS-D documentation is available on the GridAPPS-D website. The default installation of GridAPPS-D comes with a sample application. Steps to install and run a simulation with a sample application are provided under “Installing GridAPPS-D” and “Using GridAPPS-D” menu items. Any issues, bugs, or feature requirements can be reported here or you can send an email.

You talked about a CIM counterpart for communications network modeling. Could you please talk more about the options you have explored and the challenges?

Unfortunately, this is not a topic for which we yet have an answer. We plan to include communications system modeling using ns-3 as a GridAPPS-D capability. This will enable applications to explicitly include communications system components. To extend the API in a manner consistent with how it interacts with power system network components, we need an information-model-based representation of communications systems, similar to the CIM for power system networks. We have heard that such models exist but have not yet identified one ourselves. Any information about such a model would be appreciated.

Are there other database technologies besides triple store that one might use?

GridAPPS-D is datastore technology agnostic. Any data store can be used as long as it is compliant with the API. Power grid model APIs that are currently implemented using triple-store are listed here. The project did an analysis of DBMS technologies that considered RDBMS, Graph, and Triple-Store technologies. Triple-store was chosen based on that analysis.
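As a rough illustration of what "agnostic" means here, the sketch below puts the same query behind one interface with two interchangeable backends: a tiny in-memory triple store and a simple table of rows. The `ModelStore` interface, the `names_of_type` method, and the sample equipment names are all hypothetical and are not the GridAPPS-D power grid model API; the class and attribute labels merely follow CIM naming for readability.

```python
from abc import ABC, abstractmethod
from typing import Dict, Iterable, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


class ModelStore(ABC):
    """Hypothetical store interface; callers depend only on this contract."""

    @abstractmethod
    def names_of_type(self, type_name: str) -> List[str]:
        """Return the sorted names of all objects of the given type."""


class TripleModelStore(ModelStore):
    """Backend that keeps the model as subject-predicate-object triples."""

    def __init__(self, triples: Iterable[Triple]) -> None:
        self._triples = list(triples)

    def _match(self, s: Optional[str] = None, p: Optional[str] = None,
               o: Optional[str] = None) -> List[Triple]:
        # None acts as a wildcard, as in a basic triple pattern.
        return [t for t in self._triples
                if (s is None or t[0] == s)
                and (p is None or t[1] == p)
                and (o is None or t[2] == o)]

    def names_of_type(self, type_name: str) -> List[str]:
        names = []
        for subject, _, _ in self._match(p="rdf:type", o=type_name):
            for _, _, name in self._match(s=subject,
                                          p="cim:IdentifiedObject.name"):
                names.append(name)
        return sorted(names)


class TableModelStore(ModelStore):
    """Backend that keeps the same model as relational-style rows."""

    def __init__(self, rows: Iterable[Dict[str, str]]) -> None:
        self._rows = list(rows)

    def names_of_type(self, type_name: str) -> List[str]:
        return sorted(r["name"] for r in self._rows if r["type"] == type_name)


# Illustrative data only; identifiers and names are made up.
triple_store = TripleModelStore([
    ("_brk1", "rdf:type", "cim:Breaker"),
    ("_brk1", "cim:IdentifiedObject.name", "BRK_A"),
    ("_line1", "rdf:type", "cim:ACLineSegment"),
    ("_line1", "cim:IdentifiedObject.name", "LINE_1"),
    ("_brk2", "rdf:type", "cim:Breaker"),
    ("_brk2", "cim:IdentifiedObject.name", "BRK_B"),
])
table_store = TableModelStore([
    {"type": "cim:Breaker", "name": "BRK_A"},
    {"type": "cim:ACLineSegment", "name": "LINE_1"},
    {"type": "cim:Breaker", "name": "BRK_B"},
])

# The calling code is identical regardless of the backend.
for store in (triple_store, table_store):
    print(type(store).__name__, store.names_of_type("cim:Breaker"))
```

Because an application only sees the interface, the backend can be swapped without changing application code, which is the property the answer above describes.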

What technologies are required to support the standardized API?

The standardized API is purposely technology agnostic. Any combination of messaging and data management technologies that can comply with the API specification can be used.

An application can use the standardized API by building on the base Docker container provided by GridAPPS-D, which allows the application to register with the platform. The application can then use a language-specific ActiveMQ library to connect to the GridAPPS-D platform and use its pub-sub APIs. GridAPPS-D also provides a wrapper library and a sample application that Python-based applications can use and extend.

Who can use GridAPPS-D?

As an open-source platform, GridAPPS-D is available to any interested party through the GitHub site. Links are available on our website: gridapps-d.org.

How do I get involved with GridAPPS-D?

You can send us an email or visit our website.