Hybrid Machine Learning for Analyzing Power System Vulnerabilities to Random Cascading Outages

By Tao Ding and Mohammad Shahidehpour

Machine learning methods, including deep learning, reinforcement learning, and neural networks, have been successfully applied to smart grid studies. These methods are used to (1) forecast load curves, photovoltaic and wind power outputs, spot electricity prices, voltage collapse, and power system vulnerabilities; (2) schedule midterm events such as fuel purchases and preventive maintenance outages; and (3) maintain security, enhance economics, and optimize decisions in electricity markets. Machine learning applications to contingency analyses have included a fuzzy inference data fusion technique that is not affected by fault type or severity, and asynchronous data processing that improves fault location accuracy. Machine learning has also been applied to resilience analyses and power system hardening to determine the impact of hurricanes and other severe weather conditions, where power system components were divided into damaged and operable classes and a single classifier was trained as input to the machine learning algorithm using the transient energy function to obtain decision boundaries. Deep reinforcement learning applications have included adaptive emergency control schemes that manage data variations and uncertainties in power systems.

A cascading outage simulator (CS) was developed via optimization and statistical methods. A nonlinear convex optimization model solved by saddle point dynamics was established for forecasting the cascading outage path, which could change cascading outages by adjusting the injected power. A dynamic programming model was developed to identify the key network branches and initial disturbances that caused cascading outages. A risk identification algorithm was introduced based on the maximum value principle. Risk constraints were then added to the economic dispatch model to represent the impact of cascading outages, and a risk management optimization model was proposed to balance economy and risk. A statistical method was also used to identify critical network devices from large volumes of cascading outage data. Accordingly, a state-outage network model with empirical probabilities was formulated to reduce the blackout risk resulting from cascading outages.

A kernel fuzzy C-means method was proposed to forecast the outage chain, which located key components and interactions among cascading outages. To identify key components and quantify their impacts, a probabilistic model was introduced to capture the propagation patterns of cascading outages. Extending this single-layer network, a multi-layer interactive graph was used to forecast outage propagation and search for mitigation measures, which could more effectively identify the critical components that affect propagation among layers. However, changes in the initial boundary conditions of the CS may lead to different results, so running the CS for all possible initial boundary conditions is too time-consuming to be practical in online applications. To solve this problem, machine learning methods have been employed to analyze the impact of uncertainties on the CS.

Machine learning has proved effective in power system data mining for vulnerability analyses and outage management. The event-based load shedding problem was modeled hierarchically: a multi-output classification subproblem identified the best load shedding locations, and a regression subproblem forecast the minimum load shedding quantities. This hierarchical classification and regression approach was developed to achieve better outage forecasting in power system operations. However, directly applying the regression model in the hierarchical method gave poor training precision for two reasons: (1) The number of features (i.e., permissible states of bus generation and load) was generally high, so the solution accuracy might suffer from over-fitting of the available training data. (2) Many initial states in the training set did not lead to load shedding, so the machine learning model might take on an uneven output distribution with respect to the input parameters. One way to address these challenges is to apply a hybrid machine learning model that combines classification and regression.

Hybrid machine learning applications to power system vulnerability analyses:

Recent years have witnessed several blackouts in electric power systems with substantial socioeconomic impacts worldwide. Many of these blackouts were caused by local faults that propagated by triggering cascading outages across multiple geographical regions. A machine learning model with random training data can be applied to find the relationship between the input initial states and the power system vulnerability (i.e., emergency load shedding): the model is trained in an offline stage and then used for online vulnerability applications. Further, a hybrid machine learning model can be established by combining classification and regression models. The classification model, a Support Vector Machine (SVM), judges whether the cascading outage will result in any load shedding. The regression model, which uses the Gradient Boosting Regression (GBR) method, then describes the relationship between the input features and the output indicators only for cases where load shedding is prescribed. The CS application is considered for tracking local fault propagation and analyzing the power system vulnerability to such incidents. The mainstream components of CS include complex network theory and power flow analysis. Complex network theory treats a power system as a model with a large number of components, considers the interactions among those components, and analyzes its critical characteristics with regard to cascading outages.

The power flow analysis method divides the continuous fault process into several stages and calculates the power flow recursively to find the overloaded lines. An AC power flow model that accounts for frequency deviation is used to calculate frequency variations under cascading outages, which can accurately capture outage propagation patterns. A DC power flow is used for CS because of its efficiency and simplicity, and it also considers control and communication network constraints. Accordingly, the interdependence of power and communication networks is quantified by an interactive cascading model, indicating that a greater interdependence would lead to a lower probability of power outages. A Markovian-tree-based multi-timescale model is set up for simulating cascading outages, and a forward-backward searching strategy is employed to speed up the simulation. A multi-timescale dynamic simulation model is established with a sensitivity-based redispatch strategy.
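The staged procedure described above (solve the power flow, trip the overloaded lines, repeat) can be illustrated with a minimal DC power flow sketch. Everything in it is an assumption made for illustration: the 3-bus network, its susceptances, limits, and loads are hypothetical, all generation sits at the slack bus, and any bus that becomes disconnected from the slack bus simply loses its load. It is not the simulator used by the authors.

```python
from collections import deque

def energized(n_bus, in_service, slack):
    """Buses still connected to the slack (generator) bus, found by BFS."""
    adj = {i: [] for i in range(n_bus)}
    for i, j in in_service:
        adj[i].append(j)
        adj[j].append(i)
    seen, queue = {slack}, deque([slack])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return seen

def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def dc_flows(in_service, load, live, slack):
    """Solve B * theta = P on the energized island and return the line flows."""
    buses = sorted(live - {slack})
    pos = {bus: k for k, bus in enumerate(buses)}
    B = [[0.0] * len(buses) for _ in buses]
    for (i, j), b in in_service.items():
        if i not in live:            # both ends of a line share island status
            continue
        for a, c in ((i, j), (j, i)):
            if a in pos:
                B[pos[a]][pos[a]] += b
                if c in pos:
                    B[pos[a]][pos[c]] -= b
    theta = {slack: 0.0}
    theta.update(zip(buses, solve(B, [-load[bus] for bus in buses])))
    return {(i, j): b * (theta[i] - theta[j])
            for (i, j), b in in_service.items() if i in live}

def simulate_cascade(n_bus, lines, limits, load, initial_outage, slack=0):
    """Return (load shed, number of cascade stages after the initial outage)."""
    in_service = {k: b for k, b in lines.items() if k != initial_outage}
    stages = 0
    while True:
        live = energized(n_bus, in_service, slack)
        flows = dc_flows(in_service, load, live, slack)
        overloaded = [k for k, f in flows.items() if abs(f) > limits[k] + 1e-9]
        if not overloaded:
            break
        for k in overloaded:         # trip every overloaded line, then re-solve
            del in_service[k]
        stages += 1
    shed = sum(load[i] for i in range(n_bus) if i not in live)
    return shed, stages

# Hypothetical 3-bus triangle, per-unit values; bus 0 is the slack/generator bus.
LINES = {(0, 1): 10.0, (0, 2): 10.0, (1, 2): 10.0}   # line susceptances
LIMITS = {(0, 1): 1.0, (0, 2): 1.2, (1, 2): 1.2}     # thermal limits
LOAD = [0.0, 1.0, 0.5]                                # bus loads

if __name__ == "__main__":
    for outage in LINES:
        print(outage, simulate_cascade(3, LINES, LIMITS, LOAD, outage))
```

In this toy case, losing line (0, 1) pushes the full 1.5 pu onto line (0, 2), which exceeds its limit and trips, islanding both load buses. Losing line (1, 2) leaves the network radial but within limits, so no load is shed.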

The hybrid machine learning model exploits the fact that many initial states do not lead to load shedding, and employs the classification model to remove the cases without load shedding. A cascading outage model is applied using complex network theory, which accounts for electrical load characteristics when analyzing network vulnerabilities. A robustness indicator is introduced to analyze the impact of cascading outages, which demonstrates that smaller attacks and larger loads can reduce the risk of cascading outages. The Galton-Watson branching process method is introduced in CS to estimate the cascading outage trail and the corresponding blackout size. A Lagrange-Good inversion based multi-type branching process method is used to quantify the blackout propagation and analyze the interdependencies among different infrastructure systems.
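As a rough illustration of the Galton-Watson idea mentioned above, the sketch below treats each outage as independently triggering a random number of further outages and counts the total. The Poisson offspring distribution, the propagation rate `lam`, and all function names are assumptions chosen for illustration; the source does not specify them. For a subcritical process (`lam < 1`) started from one outage, the expected total number of outages is 1 / (1 - lam), which the simulation should approximate.

```python
import math
import random

def poisson(lam, rng):
    """Draw a Poisson(lam) sample with Knuth's method (adequate for small lam)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

def cascade_size(lam, rng, initial=1, max_total=10_000):
    """Total outages in one Galton-Watson cascade started by `initial` outages."""
    total = current = initial
    while current > 0 and total < max_total:
        # Each outage in this generation triggers Poisson(lam) new outages.
        current = sum(poisson(lam, rng) for _ in range(current))
        total += current
    return total

def mean_cascade_size(lam, trials=20_000, seed=0):
    """Monte Carlo estimate of the expected total number of outages."""
    rng = random.Random(seed)
    return sum(cascade_size(lam, rng) for _ in range(trials)) / trials

if __name__ == "__main__":
    lam = 0.5
    print("simulated:", mean_cascade_size(lam), "theory:", 1.0 / (1.0 - lam))
```

The `max_total` cap is only a safeguard against very long cascades when `lam` is close to or above 1, where the process can grow without bound.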

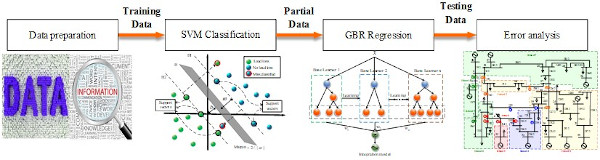

The flowchart of the hybrid machine learning approach is shown in Figure 1, which includes the following four steps:

Step 1: The CS is implemented using offline AC power flows to generate the training data. The initial states are randomly selected and the CS model is run for each initial state, where power system generation and loads are adjusted dynamically and power flows are redistributed to quantify the vulnerability metric.

Step 2: The SVM algorithm is adopted to classify the training data into two sets: cases with load shedding and cases without.

Step 3: GBR is applied to the load shedding data to determine the relationship between input power outage states and the vulnerability metric.

Step 4: The cross-validation method is deployed to resample the data set, evaluate the proposed machine learning model, and quantify the relative error between forecasted regression and actual values.

Fig. 1 Flowchart of the hybrid machine learning algorithm as a CS in power systems
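The four steps above can be sketched end to end in a few dozen lines. This is a toy illustration under stated assumptions, not the authors' implementation: the training data come from a hypothetical formula instead of a CS run, the SVM is a linear one fitted by stochastic subgradient descent, the gradient boosting regressor uses depth-1 stumps with squared loss, and the relative error is taken as total absolute error divided by total actual load shedding. In practice a machine learning library would normally supply the SVM and GBR models.

```python
import random

def train_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """Linear SVM (hinge loss) fitted by stochastic subgradient descent; y in {+1, -1}."""
    rng = random.Random(seed)
    w, b, t = [0.0] * len(X[0]), 0.0, 0
    for _ in range(epochs):
        for i in rng.sample(range(len(X)), len(X)):
            t += 1
            eta = 1.0 / (lam * (t + 100))             # decaying step size
            margin = y[i] * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrinkage from the L2 penalty
            if margin < 1.0:                          # inside the margin or misclassified
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
                b += eta * y[i]
    return w, b

def fit_stump(X, resid):
    """Single-feature threshold split that minimizes squared error on the residuals."""
    mean = sum(resid) / len(resid)
    best = (float("inf"), 0, float("inf"), mean, mean)  # fallback: constant stump
    for j in range(len(X[0])):
        for thr in set(x[j] for x in X):
            left = [r for x, r in zip(X, resid) if x[j] <= thr]
            right = [r for x, r in zip(X, resid) if x[j] > thr]
            if not right:
                continue
            ml, mr = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - ml) ** 2 for r in left) + sum((r - mr) ** 2 for r in right)
            if sse < best[0]:
                best = (sse, j, thr, ml, mr)
    return best[1:]

def train_gbr(X, y, rounds=50, lr=0.1):
    """Gradient boosting with squared loss: each stump fits the current residuals."""
    base = sum(y) / len(y)
    pred, stumps = [base] * len(y), []
    for _ in range(rounds):
        j, thr, ml, mr = fit_stump(X, [yi - pi for yi, pi in zip(y, pred)])
        stumps.append((j, thr, ml, mr))
        pred = [p + lr * (ml if x[j] <= thr else mr) for x, p in zip(X, pred)]
    return base, stumps, lr

def predict_gbr(model, x):
    base, stumps, lr = model
    return base + lr * sum(ml if x[j] <= thr else mr for j, thr, ml, mr in stumps)

def train_hybrid(X, shed):
    """Step 2: classify shed vs. no shed. Step 3: regress on the shed cases only."""
    svm = train_linear_svm(X, [1 if s > 0 else -1 for s in shed])
    Xs = [x for x, s in zip(X, shed) if s > 0]
    ys = [s for s in shed if s > 0]
    return svm, (train_gbr(Xs, ys) if ys else None)

def predict_hybrid(model, x):
    (w, b), gbr = model
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    if gbr is None or score <= 0:      # classifier says no load shedding
        return 0.0
    return predict_gbr(gbr, x)

def toy_cascade_data(n=120, seed=1):
    """Step 1 stand-in: hypothetical states and load shedding, NOT a real CS run."""
    rng = random.Random(seed)
    X = [[rng.uniform(0, 1), rng.uniform(0, 1)] for _ in range(n)]
    shed = [max(0.0, 50.0 * (x[0] + x[1] - 1.0)) for x in X]  # zero for many states
    return X, shed

def cross_validate(X, shed, k=5):
    """Step 4: k-fold cross-validation returning an aggregate relative error."""
    n, abs_err, total = len(X), 0.0, 0.0
    for fold in range(k):
        test_idx = set(range(fold, n, k))
        train = [i for i in range(n) if i not in test_idx]
        model = train_hybrid([X[i] for i in train], [shed[i] for i in train])
        for i in test_idx:
            abs_err += abs(predict_hybrid(model, X[i]) - shed[i])
            total += shed[i]
    return abs_err / total

if __name__ == "__main__":
    X, shed = toy_cascade_data()
    print("relative error:", round(cross_validate(X, shed), 3))
```

Training the regressor only on the cases with load shedding is what keeps the many zero-output states from distorting the regression fit, which is the motivation the article gives for the hybrid design.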

This article was edited by Jose Medina.

For a downloadable copy of the November 2020 eNewsletter which includes this article, please visit the IEEE Smart Grid Resource Center.
